Introduction

OpenAI Sora is once again in the spotlight, this time with its first commissioned music video, “Washed Out – The Hardest Part”. The video is composed of 55 individual clips, all generated by Sora itself. It might seem almost too amazing to be real, but it is! Back in February 2024, Sora amazed the world by demonstrating that it could produce high-definition videos from simple text prompts. At the heart of this technology is the Diffusion Transformer (DiT) architecture. Let’s explore it further.

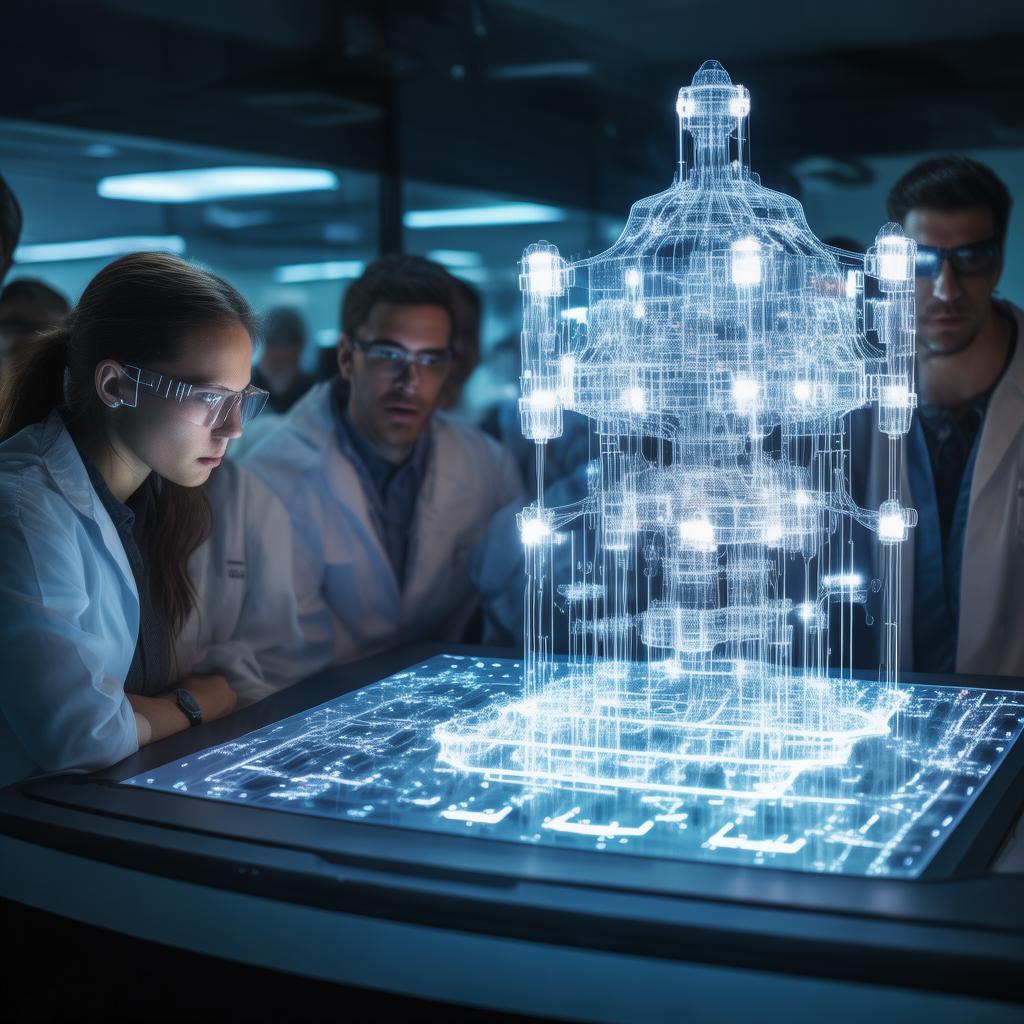

Diffusion Transformer (DiT) = Diffusion + Transformers

The core of Sora is the DiT architecture, a recent approach to generative modeling. It combines diffusion models and transformers to achieve strong results in image and video generation. Let’s take a look at its key components.

Diffusion Models

Diffusion models are a type of generative model that learn to gradually remove noise from a noisy input signal to create a clean output. During training, noise is added to real images and the model learns to predict that noise. During generation, the model starts from random noise and refines it step-by-step until a clear and realistic image appears. This iterative process enables the generation of highly detailed images.
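The add-noise and remove-noise idea above can be sketched in a few lines. This is a minimal illustration, not Sora's implementation: it assumes the common DDPM-style formulation, in which a clean signal `x0` is blended with Gaussian noise according to a noise level `alpha_bar`. The function names and the toy signal are made up for the example. A real model would predict the noise with a neural network; here the true noise is passed back in to show that an accurate prediction recovers the clean signal.

```python
import math
import random


def add_noise(x0, alpha_bar, rng):
    """Forward diffusion: blend a clean signal with Gaussian noise.

    alpha_bar close to 1 means little noise; close to 0 means mostly noise.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps


def remove_noise(xt, eps_pred, alpha_bar):
    """Invert the blend above, given a prediction of the noise that was added."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, eps_pred)]


rng = random.Random(0)
x0 = [0.0, 0.5, 1.0, 0.5]                  # toy "clean" signal (assumption)
xt, eps = add_noise(x0, alpha_bar=0.3, rng=rng)

# Stand-in for a trained network: reuse the true noise as the "prediction".
x0_rec = remove_noise(xt, eps, alpha_bar=0.3)
print(x0_rec)
```

In practice the prediction is imperfect, which is one reason samplers take many small denoising steps rather than a single large one.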

Transformers

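Transformers are a neural network architecture that has revolutionized natural language processing. In a DiT, transformer blocks serve as the denoising network: they operate on a sequence of image or video patches, much as a language model operates on a sequence of words. The text prompt is encoded into an embedding and supplied as conditioning, allowing the model to generate output that matches the prompt.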

Integration of Diffusion Models and Transformers

The DiT architecture combines diffusion models and transformers to use their respective strengths. The text prompt is converted into an embedding that captures its semantic meaning. This embedding then guides the diffusion process, in which transformer blocks denoise the latent patches, so that the generated output aligns with the text. Such a model is trained on a large dataset of visual content paired with text descriptions, learning the relationships between visual and textual information.

How does DiT work in Sora?

Training with a video, a text prompt, and a diffusion step works as follows. First, we have a video clip and a prompt like “sora is sky”. The video is divided into patches, and each patch is processed by a visual encoder to extract latent features. Noise is added to these features according to the diffusion step, and the prompt is converted into a text embedding vector. The diffusion step is also encoded, and the two encodings are combined to estimate scale and shift values that are applied to the noisy latent features. The conditioned noisy latent is then processed by a Transformer block, and the model is trained to predict the noise that was added. After training, generation starts from noise: the model repeatedly subtracts the predicted noise to obtain a noise-free latent, which is decoded to produce the final video.
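The patching and conditioning steps described above can be sketched as follows. OpenAI has not published Sora's implementation, so this is only an illustration built on assumptions: `patchify` cuts raw pixels rather than encoder features, `timestep_embedding` uses the sinusoidal encoding common in diffusion models, and `modulate` follows the scale-and-shift (adaptive layer norm) pattern from the original DiT design. The text embedding and the projection weights are random placeholders, not real model outputs.

```python
import math
import random


def patchify(video, pt, ph, pw):
    """Split a video given as nested lists [T][H][W] into flattened spacetime patches."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    patches = []
    for t0 in range(0, T, pt):
        for h0 in range(0, H, ph):
            for w0 in range(0, W, pw):
                patches.append([video[t][h][w]
                                for t in range(t0, t0 + pt)
                                for h in range(h0, h0 + ph)
                                for w in range(w0, w0 + pw)])
    return patches


def timestep_embedding(t, dim):
    """Sinusoidal encoding of the diffusion step t (dim must be even)."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


def layer_norm(x, eps=1e-6):
    """Normalize a token to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(x, weights):
    """Plain matrix-vector product; stands in for a learned projection."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def modulate(token, scale, shift):
    """Scale-and-shift conditioning applied to a normalized token."""
    return [n * (1.0 + s) + b for n, s, b in zip(layer_norm(token), scale, shift)]


# Toy 2-frame, 4x4 "video" whose value encodes its position (assumption).
video = [[[float(t * 16 + h * 4 + w) for w in range(4)]
          for h in range(4)] for t in range(2)]
patches = patchify(video, pt=1, ph=2, pw=2)    # 8 patches of 4 values each

dim = len(patches[0])
text_emb = [0.1, -0.2, 0.3, 0.0]               # placeholder for a text encoder output
cond = [a + b for a, b in zip(text_emb, timestep_embedding(10, dim))]

rng = random.Random(0)                         # random weights stand in for trained ones
w_scale = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]
w_shift = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]
scale, shift = linear(cond, w_scale), linear(cond, w_shift)

conditioned = [modulate(p, scale, shift) for p in patches]
print(len(conditioned), conditioned[0])
```

The conditioned tokens would then pass through the attention and feed-forward layers of a Transformer block. Conditioning through scale and shift lets the prompt and diffusion step influence every token without lengthening the token sequence.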

Benefits of DiT in Sora

The DiT architecture offers several advantages to Sora. It improves expressiveness, allowing Sora to learn a more flexible representation of input data. It enhances generalization, helping Sora handle prompts and scenes it has not seen before. It also increases robustness, making Sora more resistant to perturbations in its input. Additionally, transformers scale well with more data and compute, which makes the design suitable for large-scale models like Sora.

DiT represents a major advancement in AI-powered video generation. Although OpenAI keeps many details of Sora under wraps, its capabilities hint at a bright future for this technology, with the potential to revolutionize multiple fields.