Introduction

Biological neurons have been a central inspiration for research on artificial neural networks. These complex cells underlie the functions of the brain. A biological neuron consists of dendrites, a soma (cell body), an axon, and synapses, all of which contribute to processing information. The McCulloch-Pitts Neuron, an early computational model, attempts to mimic the basic operation of these biological units. This article covers the fundamentals of the McCulloch-Pitts Neuron: its structure, how it operates, and its impact on the field.

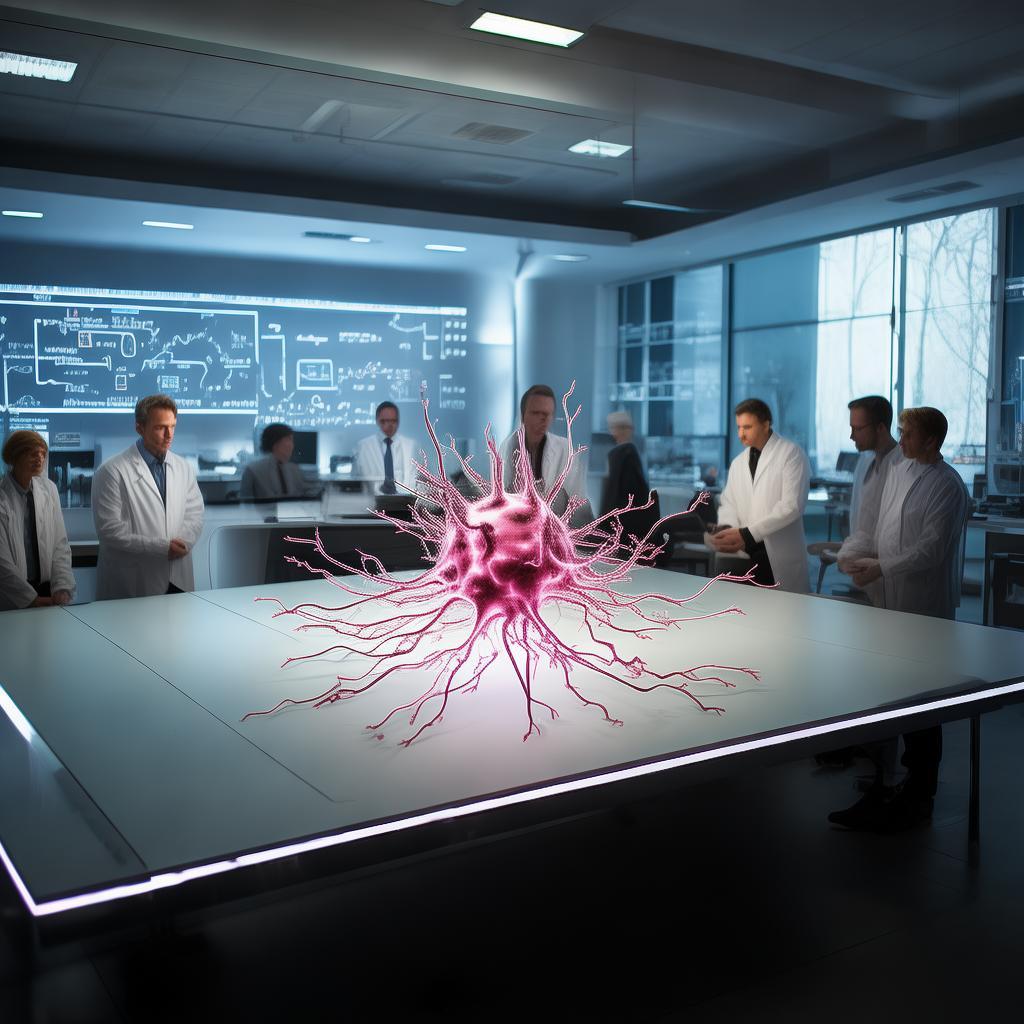

Biological Neurons: The Brain’s Building Blocks

Biological neurons are the fundamental units of the brain. Dendrites receive signals from other neurons, the soma processes this information, the axon transmits the output, and synapses serve as the connection points to other neurons. In essence, a neuron works like a miniature biological computer: it takes in input signals, processes them, and passes on the output.

The McCulloch-Pitts Neuron: A Pioneering Model

The McCulloch-Pitts Neuron, proposed by Warren McCulloch and Walter Pitts in 1943, was the first computational model of a neuron. It has two main components. In the aggregation step, the neuron sums its boolean inputs (each either 0 or 1). In the threshold decision step, a threshold function compares that sum to a fixed value and outputs either 1 or 0.
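The two steps can be sketched in a few lines of Python. This is a minimal illustration, not the original formulation: the helper names `aggregate`, `threshold_decision`, and `mp_neuron` are hypothetical, and inhibitory inputs are left out here for simplicity.

```python
from typing import Sequence


def aggregate(inputs: Sequence[int]) -> int:
    """Aggregation step: add up the boolean inputs."""
    return sum(inputs)


def threshold_decision(total: int, theta: int) -> int:
    """Threshold step: fire (1) if the aggregated value reaches theta, else 0."""
    return 1 if total >= theta else 0


def mp_neuron(inputs: Sequence[int], theta: int) -> int:
    """Hypothetical helper: one McCulloch-Pitts unit without inhibitory inputs."""
    # The model only accepts boolean inputs, so reject anything else.
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("McCulloch-Pitts inputs must be 0 or 1")
    return threshold_decision(aggregate(inputs), theta)


print(mp_neuron([1, 0, 1], theta=2))  # 1: the sum 2 reaches theta = 2
print(mp_neuron([1, 0, 0], theta=2))  # 0: the sum 1 falls short
```

Splitting the unit into two functions mirrors the two components described above; a single expression would behave the same way.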

For example, consider predicting whether you will watch a football game. Possible inputs include “Is the Premier League on?”, “Is it a friendly game?”, “Are you not at home?”, and “Is Manchester United playing?”, each encoded as 1 for yes and 0 for no. Some inputs can be inhibitory. “Are you not at home?” is one, since you cannot watch the game if you are not home. In the McCulloch-Pitts model, an active inhibitory input forces the output to 0 regardless of the other inputs.

Thresholding Logic

The McCulloch-Pitts Neuron fires (outputs 1) when no inhibitory input is active and the sum of its inputs meets or exceeds a threshold value θ; otherwise it outputs 0. For instance, if you decide to watch the game only when at least two conditions are met, θ is set to 2. Note that this foundational model accepts only binary inputs (0 or 1) and has no learning mechanism; learning was introduced in later models.
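The football example can be encoded as a sketch with θ = 2 and “Are you not at home?” as the only inhibitory input. Treating the other three questions as excitatory is an assumption made for illustration, and the function names `mp_neuron` and `watch_game` are hypothetical.

```python
from typing import Sequence


def mp_neuron(excitatory: Sequence[int], inhibitory: Sequence[int], theta: int) -> int:
    """Hypothetical helper: a McCulloch-Pitts unit with absolute inhibition."""
    # Any active inhibitory input blocks firing outright.
    if any(inhibitory):
        return 0
    # Otherwise fire when the excitatory sum reaches the threshold.
    return 1 if sum(excitatory) >= theta else 0


def watch_game(premier_league_on: int, friendly_game: int,
               not_at_home: int, man_utd_playing: int) -> int:
    # Assumed encoding: "not at home" is inhibitory, the rest are excitatory.
    return mp_neuron(
        excitatory=[premier_league_on, friendly_game, man_utd_playing],
        inhibitory=[not_at_home],
        theta=2,
    )


print(watch_game(1, 0, 0, 1))  # 1: two conditions met and you are at home
print(watch_game(1, 0, 0, 0))  # 0: only one condition met
print(watch_game(1, 1, 1, 1))  # 0: you are not at home, so the unit is inhibited
```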

Boolean Functions with the McCulloch-Pitts Neuron

The McCulloch-Pitts Neuron can represent various boolean functions. The AND function fires only when all inputs are ON, for example $x_1 + x_2 + x_3 \geq 3$. The OR function fires when any input is ON: $x_1 + x_2 + x_3 \geq 1$. Inhibitory inputs make further functions possible: $x_1$ AND NOT $x_2$ (with $x_2$ inhibitory and $\theta = 1$), NOR, which fires only when all inputs are OFF (every input inhibitory, $\theta = 0$), and NOT, which inverts a single input (one inhibitory input, $\theta = 0$).
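Each of these functions can be checked against its full truth table. The sketch below assumes absolute inhibition, as described earlier, and uses hypothetical names (`mp_neuron`, `and3`, `or3`, `x1_and_not_x2`, `nor2`, `not1`); it compares every unit against Python's own boolean operators.

```python
from itertools import product
from typing import Sequence


def mp_neuron(excitatory: Sequence[int], inhibitory: Sequence[int], theta: int) -> int:
    """Hypothetical helper: absolute inhibition, then threshold the excitatory sum."""
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= theta else 0


def and3(x1: int, x2: int, x3: int) -> int:
    return mp_neuron([x1, x2, x3], [], theta=3)  # x1 + x2 + x3 >= 3


def or3(x1: int, x2: int, x3: int) -> int:
    return mp_neuron([x1, x2, x3], [], theta=1)  # x1 + x2 + x3 >= 1


def x1_and_not_x2(x1: int, x2: int) -> int:
    return mp_neuron([x1], [x2], theta=1)  # x2 is inhibitory


def nor2(x1: int, x2: int) -> int:
    return mp_neuron([], [x1, x2], theta=0)  # fires only if both inputs are 0


def not1(x: int) -> int:
    return mp_neuron([], [x], theta=0)  # fires only if x is 0


# Compare each unit against Python's boolean operators on every input combination.
for a, b, c in product((0, 1), repeat=3):
    assert and3(a, b, c) == int(a and b and c)
    assert or3(a, b, c) == int(a or b or c)
for a, b in product((0, 1), repeat=2):
    assert x1_and_not_x2(a, b) == int(a == 1 and b == 0)
    assert nor2(a, b) == int(not (a or b))
for a in (0, 1):
    assert not1(a) == 1 - a
print("all truth tables match")
```

NOR and NOT rely on $\theta = 0$: with no excitatory inputs the sum is 0, so the unit fires unless an inhibitory input is active.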

Geometric Interpretation

The McCulloch-Pitts Neuron can be visualized geometrically by plotting its inputs as points in a multi-dimensional space and drawing a decision boundary. With two inputs, the boundary for OR is the line $x_1 + x_2 = 1$ and the boundary for AND is the line $x_1 + x_2 = 2$; points on or above the line produce output 1, and points below it produce 0. With three inputs the boundary becomes a plane, and in general, with $n$ inputs, it is the hyperplane $\sum_{i=1}^{n} x_i = \theta$.
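To make the geometry concrete, the sketch below lists which corners of the unit square (or cube) lie on or above the boundary $\sum_i x_i = \theta$. The helper name `positive_region` is hypothetical, and inhibitory inputs are ignored here.

```python
from itertools import product
from typing import List, Tuple


def positive_region(n_inputs: int, theta: int) -> List[Tuple[int, ...]]:
    """Corners of the unit hypercube on or above the hyperplane sum(x) = theta."""
    corners = product((0, 1), repeat=n_inputs)
    return [p for p in corners if sum(p) >= theta]


print("OR  (line x1 + x2 = 1):", positive_region(2, 1))  # [(0, 1), (1, 0), (1, 1)]
print("AND (line x1 + x2 = 2):", positive_region(2, 2))  # [(1, 1)]
print("AND (plane x1 + x2 + x3 = 3):", positive_region(3, 3))  # [(1, 1, 1)]
```

The corners returned are exactly the inputs for which the unit fires, so each boundary splits the corners into the two output classes.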

Limitations of the McCulloch-Pitts Neuron

Although it was a pioneering model, the McCulloch-Pitts Neuron has several limitations. It cannot handle non-boolean inputs, its threshold must be set by hand, it treats all inputs equally because it has no weights, and it cannot represent functions that are not linearly separable, such as XOR. These limitations led to more advanced models such as Frank Rosenblatt's perceptron (1958), which introduced weighted inputs and a mechanism for learning the weights and threshold.
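A brute-force search makes the XOR limitation concrete. The sketch below, using hypothetical helper names, tries every way of marking each of two inputs as excitatory or inhibitory together with every threshold that produces distinct behavior, and reports the settings under which a single McCulloch-Pitts unit reproduces a target function. It considers only one unit with unweighted inputs, as described in this article.

```python
from itertools import combinations, product
from typing import Callable, List, Sequence, Tuple


def mp_neuron(excitatory: Sequence[int], inhibitory: Sequence[int], theta: int) -> int:
    """Hypothetical helper: absolute inhibition, then threshold the excitatory sum."""
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= theta else 0


def find_mp_units(target: Callable[..., int],
                  n_inputs: int = 2) -> List[Tuple[Tuple[int, ...], int]]:
    """Return every (inhibitory input indices, theta) that reproduces target exactly."""
    indices = range(n_inputs)
    points = list(product((0, 1), repeat=n_inputs))
    solutions = []
    for k in range(n_inputs + 1):
        for inhibitory in combinations(indices, k):
            excitatory = [i for i in indices if i not in inhibitory]
            # theta from 0 to n_inputs + 1 covers every distinct threshold behavior.
            for theta in range(n_inputs + 2):
                if all(
                    mp_neuron([x[i] for i in excitatory],
                              [x[i] for i in inhibitory], theta) == target(*x)
                    for x in points
                ):
                    solutions.append((inhibitory, theta))
    return solutions


print("OR :", find_mp_units(lambda a, b: a | b))  # [((), 1)]
print("AND:", find_mp_units(lambda a, b: a & b))  # [((), 2)]
print("XOR:", find_mp_units(lambda a, b: a ^ b))  # []
```

XOR fails because it must output 1 when the sum is 1 but 0 when the sum is 2, which no single threshold on the sum can do, and marking any input inhibitory wrongly suppresses one of the cases where XOR is 1.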

Conclusion

The McCulloch-Pitts Neuron marked the starting point of neural network research. While it can represent simple boolean functions and provides a geometric view of decision boundaries, its limitations spurred the creation of more sophisticated models. The journey from the McCulloch-Pitts Neuron to modern neural networks showcases the evolution of our understanding and capabilities in artificial intelligence.