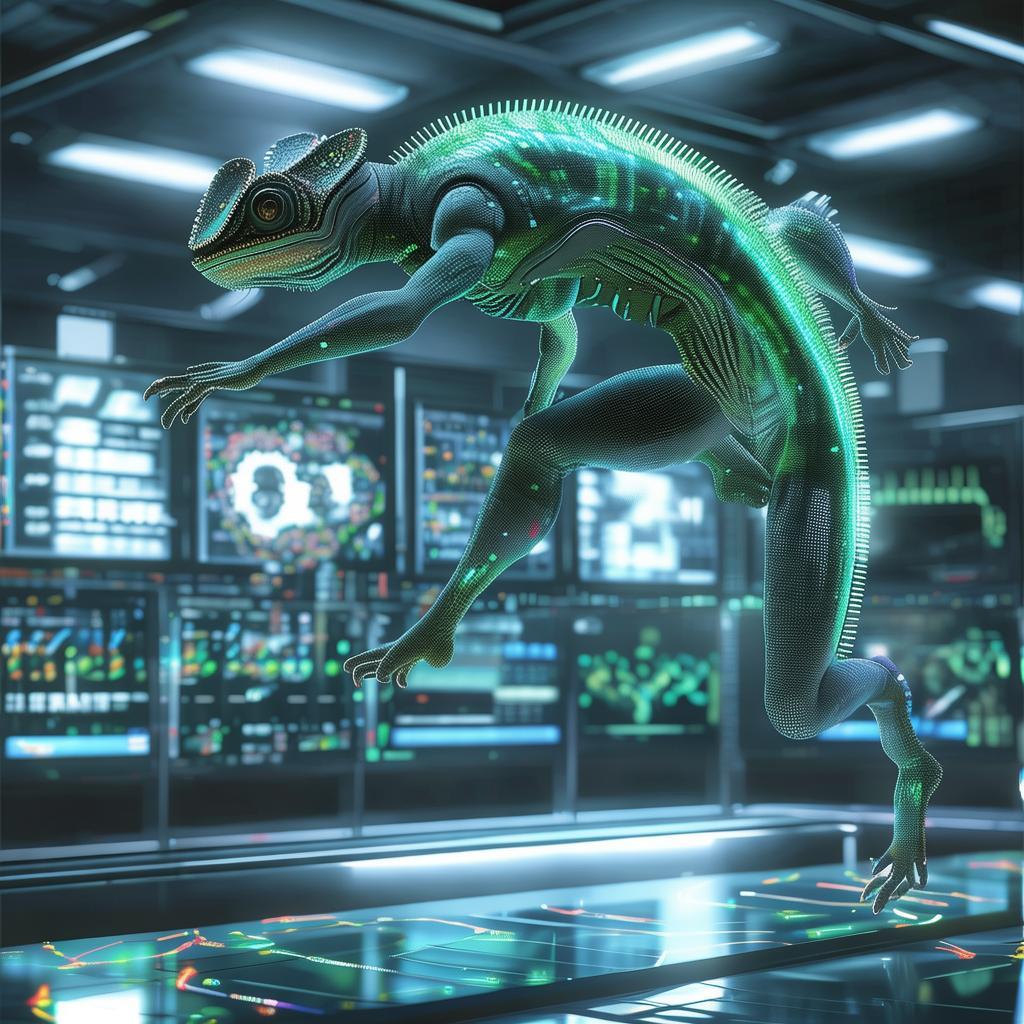

Meta Unveils Chameleon, an Advanced Multimodal LLM

Meta has introduced Chameleon, an advanced multimodal large language model (LLM) that positions the company as a strong player in the artificial intelligence arena. Chameleon is built on an early-fusion, token-based, mixed-modal architecture. This sets it apart from models that process each modality separately and combine the results later.

Understanding Chameleon’s Unique Architecture

Chameleon’s architecture integrates text, code, and images from the very start. This differs from late-fusion approaches, where each modality is encoded separately and the representations are merged only at a later stage. Chameleon instead uses a unified token space, so it can reason over and generate arbitrarily interleaved sequences of text and images. Images are encoded into discrete tokens, much like words, which produces a single mixed vocabulary of text, code, and image tokens. Because of this, the same transformer architecture can process sequences containing both image and text tokens without separate modality-specific encoders, which strengthens its performance on multimodal tasks.
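To make the shared-vocabulary idea concrete, here is a toy Python sketch of early-fusion tokenization. It assumes a vector-quantized image tokenizer: each image patch embedding is snapped to its nearest codebook entry, the resulting code index is shifted past the text ids, and the image codes are spliced into the sequence between begin-of-image and end-of-image markers. The vocabulary sizes, marker ids, two-dimensional codebook, and all function names are hypothetical illustrations, not Meta’s implementation. A real tokenizer learns its codebook and uses far more entries.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sizes, chosen only for illustration (not Chameleon's real configuration).
TEXT_VOCAB_SIZE = 1000                 # ids 0..999 stand for text/code tokens
CODEBOOK_SIZE = 4                      # entries in the toy image codebook
BOI = TEXT_VOCAB_SIZE + CODEBOOK_SIZE  # hypothetical <begin-image> marker id
EOI = BOI + 1                          # hypothetical <end-image> marker id

Vector = Tuple[float, ...]


def quantize(patches: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Map each patch embedding to the index of its nearest codebook vector."""
    codes = []
    for p in patches:
        dists = [math.dist(p, c) for c in codebook]
        codes.append(dists.index(min(dists)))
    return codes


def image_to_tokens(patches: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Offset image codes past the text ids so both live in one vocabulary."""
    image_ids = [TEXT_VOCAB_SIZE + c for c in quantize(patches, codebook)]
    return [BOI] + image_ids + [EOI]


def interleave(segments) -> List[int]:
    """Flatten ("text", ids) and ("image", (patches, codebook)) segments into one sequence."""
    seq: List[int] = []
    for kind, payload in segments:
        if kind == "text":
            seq.extend(payload)
        elif kind == "image":
            seq.extend(image_to_tokens(*payload))
        else:
            raise ValueError(f"unknown segment kind: {kind}")
    return seq


codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
patches = [(0.9, 0.1), (0.1, 0.8)]
print(interleave([("text", [12, 7]), ("image", (patches, codebook)), ("text", [42])]))
# prints [12, 7, 1004, 1001, 1002, 1005, 42]
```

Once text and images are reduced to one flat list of integer ids, a single transformer can model the whole sequence autoregressively, with no modality-specific branch inside the model.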

Training Innovations for Chameleon

Training a model like Chameleon is no easy feat, largely because mixing modalities in a single token stream can make training unstable at scale. Meta’s team addressed this with several architectural enhancements and training techniques: a new image tokenizer, query-key normalization (QK-Norm), dropout, and z-loss regularization. The team curated a high-quality dataset of 4.4 trillion tokens for training. Pre-training proceeded in two stages, the model was trained in 7B- and 34B-parameter versions, and the total compute exceeded 5 million GPU hours on Nvidia A100 80GB GPUs.
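QK-Norm and z-loss both aim to keep logits from growing without bound during training. Below is a minimal pure-Python sketch of the two ideas as they are commonly defined. QK-Norm layer-normalizes each query and key vector before their dot product. Z-loss adds a small penalty on the squared log of the softmax normalizer. The function names, the single-head setup, the omission of learned normalization parameters, and the default coefficient are illustrative assumptions, not Meta’s training code.

```python
import math
from typing import List

Matrix = List[List[float]]


def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """Normalize a vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def qk_norm_logits(queries: Matrix, keys: Matrix) -> Matrix:
    """Scaled dot-product attention logits with QK-Norm applied to queries and keys.

    Each normalized vector has squared length close to d, so every logit is
    bounded by roughly sqrt(d), however large the raw activations become.
    """
    d = len(queries[0])
    qn = [layer_norm(q) for q in queries]
    kn = [layer_norm(k) for k in keys]
    return [[sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in kn] for q in qn]


def z_loss(logits: List[float], coeff: float = 1e-5) -> float:
    """Auxiliary penalty coeff * (log Z)**2, where Z = sum(exp(logits)).

    Pushing log Z toward 0 discourages the output logits from drifting upward.
    The log-sum-exp is computed stably by subtracting the max logit first.
    The default coeff is an illustrative value, not a documented setting.
    """
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return coeff * log_z ** 2


# Scaling the raw query by 1000x barely changes the normalized logit.
keys = [[1.0, 2.0, 3.0]]
print(qk_norm_logits([[1.0, 2.0, 3.0]], keys))           # approximately [[1.732]]
print(qk_norm_logits([[1000.0, 2000.0, 3000.0]], keys))  # approximately [[1.732]]
print(z_loss([0.0, 0.0], coeff=1.0))                      # (log 2)**2, about 0.48
```

Without the normalization step, the second logit would be roughly 1000 times larger than the first. That kind of growth is what these stabilization techniques are meant to prevent.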

Chameleon’s Impressive Performance

On vision-language tasks, Chameleon achieves state-of-the-art results in image captioning, outperforming models such as Flamingo-80B and IDEFICS-80B, and it is competitive on visual question answering (VQA). It does so with a smaller model and fewer in-context examples than those 80B-parameter baselines, which highlights its efficiency. It also holds its own on text-only tasks, performing competitively with strong text-only models. Beyond benchmarks, it can generate mixed-modal responses that interleave text and images.

Future Prospects of Chameleon

Meta views Chameleon as a significant step toward unified multimodal AI. The company plans to explore integrating additional modalities, such as audio. In robotics, Chameleon’s early-fusion architecture could help produce more capable and responsive AI-driven robots. More broadly, its ability to handle mixed-modal inputs opens the way to more sophisticated interactions and applications across many industries.

Overall, Meta’s Chameleon marks an exciting development in the multimodal LLM space. As Meta continues to improve and expand the model, Chameleon has the potential to set a new standard for AI models that process diverse types of information.